Xerox PARC and the Origins of GUI

Before the graphical user interface (GUI) came along, using a personal computer was outrageously clunky.

To do anything, you’d type text commands into a command-line interface. You had to know exactly what to type. And if you made a spelling mistake, well sir, you’d need to retype it.

GUI allowed computing to go mainstream. Indeed, it’s one of the major reasons we spend so much time on our devices today.

The origin story of GUI dates back to the 1970s. And it features no shortage of corporate skullduggery and missed opportunities.

GUI was pioneered by computer engineers at the Xerox Palo Alto Research Center (aka PARC). But it was ultimately Apple and Microsoft who reaped the benefits and brought GUI to the masses.

Where did the idea for GUI come from?

photo: Xerox PARC

GUI (pronounced “gooey”) uses a computer’s processing capabilities to display information visually, and to let the user access that information with greater ease.

GUI offers a drag-and-drop user interface, with visual representations of files and file structures for navigation.

Before we had a visual language for getting around computers, we had codes and commands.

Launched in 1981, MS-DOS is perhaps the best-known example of a command-line OS, with its iconic UI of white-grey text on a black background.

MS-DOS organized your hard drive’s content in a file system and allowed for the reading and writing of files. It did this in much the same manner as a modern OS, just without any visuals whatsoever.

This compact, basic OS had a very long life: support for MS-DOS was finally discontinued in 2001. Early consumer versions of Microsoft Windows were all based on MS-DOS, with the human-friendly GUI built on top of the text-only OS (Windows ME, released in 2000, was the last version based on DOS).

Before Xerox PARC researchers got to work in the 70s, the basic idea of GUI had been circulating for some time. Indeed, the idea of making computers more visual, more tactile, and more accessible was explored by several visionaries.

In the 60s, computer pioneer Douglas Engelbart conceptualized GUI. He envisioned a computer system that used hypertext, a window-based display, and videoconferencing. He also came up with the concept for the computer mouse.

Engelbart did this work at the Stanford Research Institute (SRI). The GUI prototype he designed was called the oN-Line System (NLS), and it presented a brand-new idea for a computerized information system.

He showed it off during his 1968 ‘Mother of all Demos’ in San Francisco.

That same year, computer scientist Alan Kay, who went on to work at Xerox PARC, defended his Ph.D. thesis about a new, visually oriented computer programming language. Titled FLEX: A Flexible Extendable Language, his work focused on making it easier for users to program computers themselves. In doing so, he gestured towards a future of mass computer literacy.

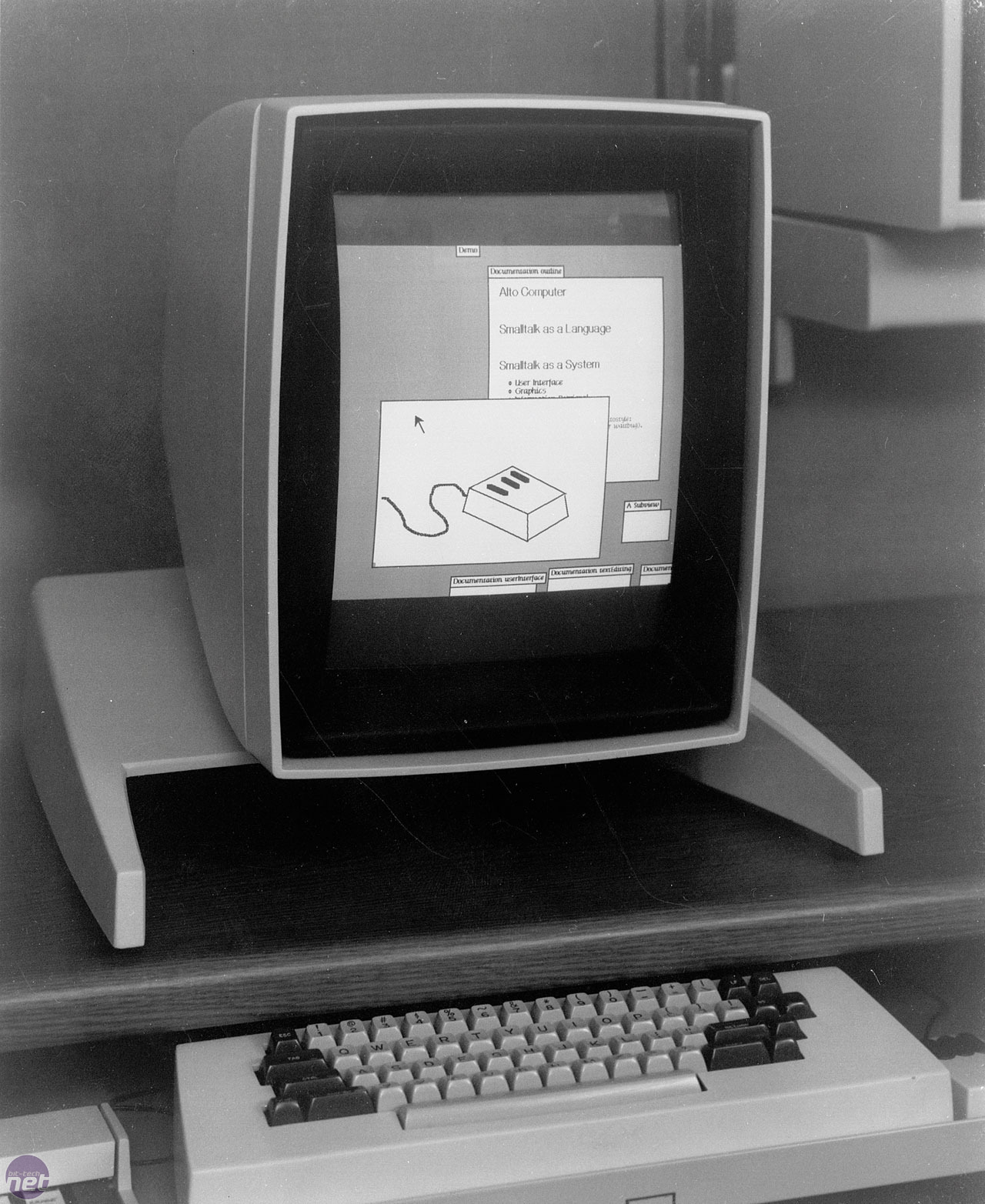

Kay’s interest in making computers accessible would culminate in the object-oriented programming language known as Smalltalk.

Smalltalk was developed at PARC in the early 70s. It was targeted at children in particular.

Xerox PARC

But conceptualizations and big-picture thinking aside, Xerox PARC was where GUI finally found its way into a working product.

Opening its doors in the summer of 1970, the Xerox PARC research facility was conceived as a place to research the technology of the future.

Xerox put little-known yet vital tech pioneer Bob Taylor in charge of PARC’s Computer Science Laboratory (he led it from 1970 to 1983). Taylor had previously been director of ARPA’s (later DARPA’s) Information Processing Techniques Office.

Xerox also hired some of the best and brightest computer engineers, scientists, and programmers around. Being in close proximity to Stanford University helped.

PARC quickly went on to change the world of computing, and the world in general, developing many of the technologies we use daily.

Engineer Lynn Conway joined PARC in 1973 after working for IBM. While there, she co-developed the VLSI (very-large-scale integration) project with Carver Mead of Caltech. Their VLSI work paved the way for CAD technology, BSD Unix, and far more complex chip designs.

Ethernet was also invented at PARC, by innovator Bob Metcalfe.

PARC alumni played a big role in developing the first laptop ever, the GriD Compass. Launched in 1982, it was built by a team overseen by John Ellenby and other former Xerox PARC engineers.

More pertinent to the topic at hand, in 1973, PARC’s team developed the Xerox Alto ‘personal’ computer. It supported a bit-mapped display, a window- and icon-based GUI operating system, and a WYSIWYG (What You See Is What You Get) editor. It also had a mouse, a keyboard, and an Ethernet port.

Over the rest of the 70s, this computer and its GUI-based OS continued to evolve, providing the basic building blocks of personal computing.

The Xerox Alto and a major missed opportunity

The Xerox Alto, despite nominally being a ‘personal’ computer, was never sold as a mass-market commercial product. Only universities and research institutions ever bought them.

Why is that?

To begin with, the machine cost well over $100,000 in today’s dollars. On top of that, only 2,000 were ever built, because Xerox executives viewed the product as an overly expensive workstation.

Still, Xerox had a big head start in the PC market. If Xerox had seriously invested in cutting the cost per unit, it’s conceivable the company could have headed off Apple and Microsoft and become #1 in the home computing market.

Case in point, check out this 1979 commercial for the Alto.

It’s all there: a cursor-based, point-and-click UI, a window-based file system, and local area network/modem support for sending your files to other computers. Indeed, the commercial shows us the office of the future.

It was the first personal computer to showcase all of this now-ubiquitous computing tech.

So the question is: why did Xerox management totally blow this chance to mass-market GUI?

Here’s one credible theory: there was a fundamental culture rift between the Palo Alto facility and company HQ in Rochester, New York.

Managers were 3,000 miles away, in a more staid, traditional business hierarchy. It was hard for them to give the green light to the ‘left-field’ ideas of California computer hippies.

PARC scientists even coined a disparaging term for the execs: “toner heads.”

photo: Xerox PARC Computer Science Laboratory, Computer History Museum

Xerox Corporation had become very rich making photocopying and printing products, which bred a myopic fixation on product lines in those verticals. Laser printers and copiers ruled supreme.

Today Xerox seems significantly more enlightened. For example, in 2018 they purchased the intellectual property portfolio of Thin Film, giving them a leg up in smart packaging tech and a shot at being a big player in the Internet of Things.

Unfortunately, late 70s Xerox wasn’t on that forward-thinking tip. Laser printing was everything.

The top brass’s inertia created a huge opening for other Silicon Valley upstarts. Apple struck first.

The Apple of Enlightenment: Steve Jobs at Xerox PARC

The story is legendary and is often simplified to this: in 1979, Jobs let Xerox buy 100,000 pre-IPO shares of Apple in exchange for a comprehensive tour of PARC for himself and Apple’s engineers. There he discovered the magical secret of GUI. Xerox engineers didn’t realize the new technologies would be game changers.

Of course, stories like this have a way of being simplified and mythologized.

The engineers at Xerox PARC knew their work had the potential to change the world. They’d known for years.

However, because PARC researchers couldn’t get support for their projects, there was a steady attrition of talent out of PARC over the course of the 70s.

By the time Jobs and company visited the site, the remaining trailblazing staff were all too happy to share their knowledge with receptive, open-minded people, and more than a few were likely already looking to jump ship. Indeed, some had joined Apple even before Jobs’s visit, while others left for Microsoft and various Silicon Valley companies.

In 1981, Xerox released the Xerox Star, which featured many of the user-friendly innovations the Apple Macintosh would be fêted for three years later (a GUI with icons and folders, plus an object-oriented design that actually surpassed Apple’s initial effort). But like its predecessor, the Alto, it was simply way too expensive (over $45,000 in today’s dollars at launch) and failed to find a market.

Meanwhile, Jobs “put a dent in the universe” with the original Apple Macintosh OS.

The original Mac OS: Macintosh System 1

In 1984, Apple released the Macintosh in the United States. It debuted with a price tag of $2,495 (just over $5,800 today). Not exactly cheap, but doable.

And with the help of some iconic, George Orwell-inspired marketing, around 250,000 were sold in the first year.

It came preloaded with Apple’s System 1 OS, which featured a clean, black-and-white, window- and icon-based GUI centered on the traditional concept of a work desktop.

The menu bar allowed you to access files, apps, and system features by clicking and dragging. The ‘Finder’ app helped you...find things.

There was a calculator, an alarm clock, and a notepad, plus a control panel for adjusting things like volume, time/date, and mouse settings.

At the time, these features were absolutely remarkable.

Windows 1.0

One year later, in 1985, Microsoft rolled out Windows 1.0.

Microsoft was a major developer of productivity software for the Apple Macintosh, so Bill Gates and friends had gotten their hands on a beta version of the computer (and its OS) before launch. By all accounts, Gates was mesmerized and immediately set to work developing a GUI-based OS to rival the Mac’s.

Windows 1.0 worked as a graphical shell that ran on top of MS-DOS. Among other apps, it included a notepad, a calendar, a word processor named “Write,” and the first iteration of MS Paint. A menu in the corner of each window let you manage the windows of these various apps.

Truth be told, Microsoft didn’t really perfect its take on the GUI formula until 1990, when it released the very popular Windows 3.0. But hey, you gotta start somewhere.

A lawsuit makes GUI open source

Apple sued Microsoft in 1988 after the release of Windows 2.0, which Apple felt had gone too far by plagiarizing the Mac’s visual displays and appropriating its concepts without a license.

It was a hotly contested case, but in the end the court ruled in favor of Microsoft.

This was due to two factors.

The first was that Apple had earlier signed an agreement licensing Mac design features to Microsoft for use in Windows; somehow, Apple’s legal team had failed to appreciate that the agreement applied to all future iterations of Microsoft Windows.

The second factor was the “merger doctrine”: basic ideas cannot be copyrighted, and neither can expressions so limited that they effectively merge with the idea itself. It was therefore legally fine to copy the “look and feel” of another program, provided that internal structures and functions were different. Apple was absolutely crestfallen.

With this landmark ruling, GUI was free to run amok.

Has GUI run its course?

The advent of GUI made computers radically more accessible to the average person, certainly in terms of ease-of-use, but probably more critically in desire-to-actually-use. With GUI, it was possible to get past the startup screen without consulting a manual. That made all the difference in the world for novice users.

Today, GUI is so embedded in our daily lives we don’t even consider it. Anyone under 40 has grown up with it as a fact of daily computer life.

That said, some critics suggest that GUI as we know it has run its course. Computing pioneer Ted Nelson, for example, thinks it’s outrageous that 40+ years later, we’re still all using Mac, Windows, and Google products that are essentially nicer versions of Xerox PARC’s original OS.

Nelson also believes that GUI, in its mainstream iteration, stymies creativity by ‘fixing’ the spatial qualities of computing and replicating old hierarchies of files and work that have existed since the 19th century.

He may have a point. In any case, things are finally, slowly moving beyond GUI alone. We see this in multimodal UIs, such as the one on the Amazon Echo Spot, where voice commands and GUI work in tandem.

Workaday spatial interfaces that incorporate VR and AR technology are on the horizon as well.

Spatial interface design has traditionally been the preserve of the gaming industry. But it’s entirely possible it will eventually cross over into mainstream work tools.

Obstacles include the significant computing resources that 3D displays consume, and the need to further develop hardware peripherals to make the technology practical for the mainstream.

In the near term, what we’re most likely to see are more so-called natural user interfaces (NUIs): more intuitive, ‘invisible’ GUIs that hide and minimize the UI’s operational complexity even further.

GUI is resilient. We’re super familiar with it, and when solving problems, humans generally reach for the quickest, most efficient solution: if it ain’t broke, don’t fix it.

Still, almost half a century later, perhaps we’re ready for a little change.